Today I found myself using public transport to get to work for the first time. Although I have a 62 km road trip to get from my home in Edenvale, near OR Tambo International Airport, to the CSIR in Pretoria, it usually takes me no more than 45 minutes. This is because I travel almost the entire distance on the R21 highway, which is hardly ever slow in the direction I’m going, as I’m travelling against the traffic.

Gauteng's Gautrain - www.gautrain.co.za

But this week things changed. The e-tolling system came into effect, and the additional cost it adds to my journey now makes the combined Gautrain trip to work cheaper than driving my own vehicle. At the current petrol price, my monthly budget for getting to work is about R1500. With the addition of the e-tolls, which cost an extra R240, that budget now needs to increase to R1740. With petrol prices set to rise and the South African rand weakening, this amount is bound to increase over time.

If I were to take the Gautrain, then, taking into account the average number of days I’m in the Pretoria office each month, the train trip would cost R1272, parking R180 and the bus R132, totalling R1584. I would still drive to the station, but only about one tenth of my normal monthly road distance, so my petrol to get to work would be approximately R150. That makes the total monthly cost of my Gautrain trip R1734.
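To make the comparison easy to check, here is a minimal Python sketch that simply adds up the monthly figures quoted above. The constant and function names are my own, chosen for illustration, and the station-petrol figure is the rough one-tenth estimate rather than a measured value.

```python
# Monthly commuting costs in rand, using the figures quoted in this post.
CAR_PETROL = 1500       # petrol for driving the whole way
CAR_ETOLLS = 240        # additional e-toll charges

GAUTRAIN_TRAIN = 1272   # train fares
GAUTRAIN_PARKING = 180  # parking at the station
GAUTRAIN_BUS = 132      # bus fares
STATION_PETROL = 150    # petrol for the drive to the station (rough 1/10 estimate)


def monthly_car_cost() -> int:
    """Cost of driving the whole way, including e-tolls."""
    return CAR_PETROL + CAR_ETOLLS


def monthly_gautrain_cost() -> int:
    """Cost of the combined car + Gautrain + bus trip."""
    fares_and_parking = GAUTRAIN_TRAIN + GAUTRAIN_PARKING + GAUTRAIN_BUS
    return fares_and_parking + STATION_PETROL


if __name__ == "__main__":
    car = monthly_car_cost()
    train = monthly_gautrain_cost()
    print(f"Car: R{car}, Gautrain: R{train}, saving: R{car - train}")
```

Running it prints `Car: R1740, Gautrain: R1734, saving: R6`, which is why the saving is only marginal for now.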

So we’ve established that the combined Gautrain trip is marginally cheaper, and may become more cost effective in the future. But what about the paramount reason for using public transport: mitigation of climate change? Does this choice of transport reduce my own personal carbon footprint?

I was particularly concerned because, when I started the last leg of my trip to work, the bus ride, there were only five people on the bus for the entire length of the journey. Is my personal footprint really going to be reduced if I’m using underutilised public transport? So I decided it was necessary to do the calculation and see for myself.

To start with, how much carbon dioxide is emitted if I travel to work in my personal vehicle? One journey to work is a 62 km road trip. Using the guidelines for mobile emissions published by Defra, a medium passenger vehicle emits approximately 186.8 g of CO2 per km travelled. Therefore, for my 62 km journey, I’m looking at emitting an astronomical 11.58 kg of carbon dioxide, or 0.01158 tons of carbon dioxide.

Now let’s compare that to the combined Gautrain journey to work. To start the journey from home, I have to travel 8.3 km by personal vehicle to get to the Rhodesfield Gautrain station. At the same 186.8 g/km, that means I’m going to emit approximately 1.55 kg of carbon dioxide, or 0.00155 tons of carbon dioxide.
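Both car figures come from the same per-kilometre factor, so a small helper covers them. This is a sketch assuming the Defra medium-car factor of 186.8 g/km quoted above and metric tons of 1,000 kg; `car_emissions_tons` is a hypothetical name, not part of any Defra tool.

```python
# Defra-based factor quoted above for a medium passenger car (g CO2 per km).
CAR_G_PER_KM = 186.8


def car_emissions_tons(distance_km: float) -> float:
    """CO2 in metric tons for a car trip of the given length."""
    grams = distance_km * CAR_G_PER_KM
    return grams / 1_000_000  # 1 ton = 1,000 kg = 1,000,000 g


if __name__ == "__main__":
    print(f"Full drive (62 km):        {car_emissions_tons(62):.5f} t")
    print(f"Drive to station (8.3 km): {car_emissions_tons(8.3):.5f} t")
```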

The next leg of the journey is the train trip from Rhodesfield station to Hatfield station, which takes 27 minutes. A typical four-car Gautrain train has twelve 200 kW motors, a combined rating of 2400 kW, or 2.4 MW. Assuming the motors draw their full rated power for the whole 27 minutes (0.45 hours), the train uses 2.4 MW × 0.45 h = 1.08 MWh of energy.
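The energy estimate is a unit conversion from kilowatts and minutes to megawatt hours. The sketch below makes the same simplifying assumption as the text, namely that all twelve motors run at full rated power for the entire 27 minutes; real traction power varies with acceleration, coasting and braking, so treat the result as a rough estimate. The names are illustrative only.

```python
MOTOR_COUNT = 12       # motors on a four-car train, as quoted above
MOTOR_RATING_KW = 200  # rated power per motor, kW
TRIP_MINUTES = 27      # Rhodesfield to Hatfield


def train_energy_mwh(motors: int, rating_kw: float, minutes: float) -> float:
    """Energy in MWh, assuming every motor runs at full rated power all trip."""
    total_mw = motors * rating_kw / 1000  # kW -> MW
    hours = minutes / 60                  # minutes -> hours
    return total_mw * hours


if __name__ == "__main__":
    energy = train_energy_mwh(MOTOR_COUNT, MOTOR_RATING_KW, TRIP_MINUTES)
    print(f"{energy:.2f} MWh")
```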

The Gautrain runs on coal-powered electricity. The EPA approximates that a coal-fired power station emits 1.020129 tons of carbon dioxide per MWh. This means that the total amount of carbon dioxide emitted on my train leg would be 1.08 × 1.020129 ≈ 1.101739 tons. Now, that’s for the entire train. A four-car train can hold 321 seated passengers and 138 standing passengers. When I got on the train this morning, it was reasonably full, at about 75% of seated capacity, which is approximately 241 passengers. Dividing the emissions by the number of passengers gives the emissions per passenger: 0.00457 tons of carbon dioxide.
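The per-passenger share is the whole-train emission divided by the head count. Here is a minimal sketch, assuming the 1.08 MWh energy estimate, the 1.020129 t/MWh coal factor and the 321-seat capacity quoted above; the head count is rounded to whole people, so 75% of 321 seats becomes 241 passengers. The function name is hypothetical.

```python
COAL_T_PER_MWH = 1.020129  # tons of CO2 per MWh (EPA-based figure quoted above)
SEATS = 321                # seated capacity of a four-car train


def train_emissions_per_passenger(energy_mwh: float, seated_occupancy: float) -> float:
    """Tons of CO2 per passenger for one train trip.

    The whole-train emission is shared equally among the passengers,
    counted as a fraction of the seated capacity rounded to whole people.
    """
    total_tons = energy_mwh * COAL_T_PER_MWH
    passengers = round(SEATS * seated_occupancy)
    return total_tons / passengers


if __name__ == "__main__":
    per_person = train_emissions_per_passenger(1.08, 0.75)
    print(f"{per_person:.5f} t per passenger")
```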

The last leg of my journey is the bus trip. Using the same Defra guidelines, a bus emits approximately 1034.61 g of carbon dioxide per km travelled. Therefore, for my 7 km bus trip, the total emissions would have been about 7.242 kg of carbon dioxide, or 0.007242 tons. Again, this has to be divided by the number of passengers using the bus. There were only five people on the bus, so the emission per passenger was about 0.001448 tons of carbon dioxide.

The total carbon dioxide emission for the entire journey is then about 0.00757 tons (0.00155 + 0.00457 + 0.001448). This is only about 65% of the carbon dioxide that I would have emitted if I had travelled in my own vehicle.
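Because the train and bus emissions are shared among whoever is on board, the per-passenger total depends heavily on occupancy. The sketch below recombines the three legs using the factors and the 7 km bus distance given above, with the train and bus passenger counts as parameters. The function names are my own, and the counts other than 241 and 5 in the example loop are hypothetical, chosen only to show how the result shifts when the vehicles are emptier.

```python
CAR_G_PER_KM = 186.8                # medium car, Defra factor quoted above
BUS_G_PER_KM = 1034.61              # bus, Defra factor quoted above
TRAIN_TOTAL_TONS = 1.08 * 1.020129  # whole-train CO2 for the 27-minute trip

STATION_DRIVE_KM = 8.3
BUS_KM = 7
FULL_DRIVE_KM = 62


def grams_to_tons(grams: float) -> float:
    return grams / 1_000_000


def gautrain_trip_tons(train_passengers: int, bus_passengers: int) -> float:
    """Per-passenger CO2 (tons) for the car + train + bus journey.

    The train and bus totals are shared equally among their passengers;
    the drive to the station is mine alone.
    """
    car_leg = grams_to_tons(STATION_DRIVE_KM * CAR_G_PER_KM)
    train_leg = TRAIN_TOTAL_TONS / train_passengers
    bus_leg = grams_to_tons(BUS_KM * BUS_G_PER_KM) / bus_passengers
    return car_leg + train_leg + bus_leg


if __name__ == "__main__":
    driving = grams_to_tons(FULL_DRIVE_KM * CAR_G_PER_KM)
    # (241, 5) is this morning's trip; the other pairs are hypothetical.
    for train_pax, bus_pax in [(241, 5), (241, 1), (60, 5)]:
        gautrain = gautrain_trip_tons(train_pax, bus_pax)
        print(f"train={train_pax:3d} bus={bus_pax}: {gautrain:.5f} t "
              f"({gautrain / driving:.0%} of driving)")
```

Under these assumptions, with only one passenger on the bus, or with just 60 people on the train, the combined trip would emit more than driving, so the answer really does hinge on how full the vehicles are.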

|

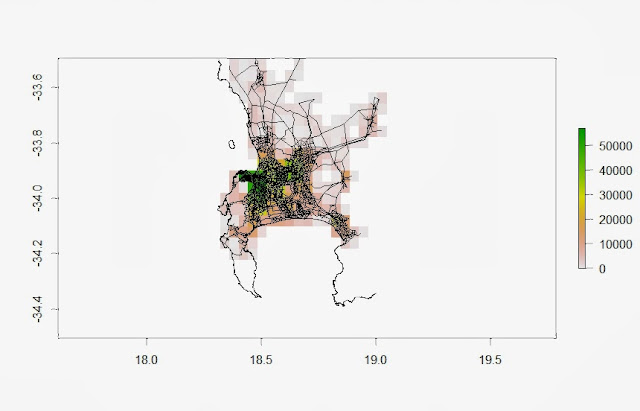

| Carbon dioxide emission (tons) depending on passenger composition |

So as far as carbon dioxide emissions go, public transport is a sure winner. The biggest drawback was the time it took to get to my office: almost two hours, compared with the usual 45-minute drive in my own vehicle. The main cause of the long journey time was waiting for the next train (I just missed my connecting train) and waiting for the bus to leave. This probably added at least half an hour to my journey. The other additional leg of my journey is the 20-minute, 1.5 km walk from the bus stop to my office. I could probably do with the walk, but it certainly adds to the length of the journey, and doesn't do much for my body odour during the course of the day.

So to make the Gautrain journey to work feasible, I need to learn to be productive on the train and on the bus. That shouldn't be too difficult: after all, I wrote this blog post while sitting on the train. There are plenty of activities I would normally do at the office that I could do during the journey instead, such as reading papers, writing out ideas or checking emails. But it will require a change of mindset to really succeed at this.

So for the time being, while my ire about the e-tolls is still a strong motivation, I will continue to use the Gautrain and make a concerted effort to adapt to a new way of travelling.